Vibe Coding Our Way to Disaster

Why Rich Hickey's Principles Matter More Than Ever

In 2010 and 2011, Rich Hickey, the creator of Clojure, gave two talks that shaped how many developers think about software design. His talks have only become more relevant since then, as we are now being inundated with AI-written code.

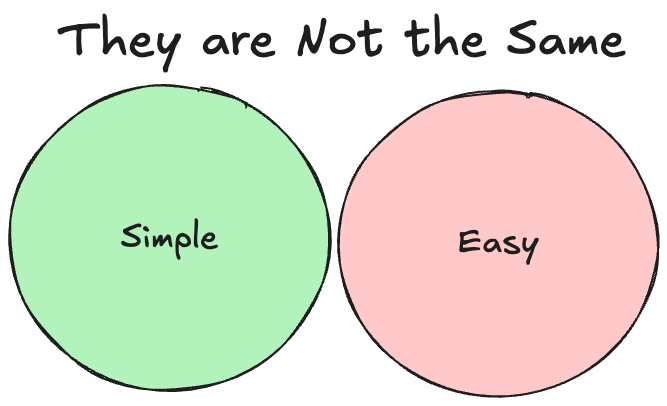

Simple Made Easy distinguishes between simplicity (things that aren't intertwined with other things) and ease (things that are familiar or quick to use). The distinction matters because we often choose easy solutions that create complex, tangled systems.

Hammock-Driven Development advocates deep, unhurried thinking about problems before writing code. Hickey argues that most critical bugs result from misunderstanding the problem rather than from implementation errors.

As I've worked with coding agents over the past year, I keep returning to these principles. They provide a framework for understanding why some AI-assisted projects produce high-quality software while others turn into a complex mess. The key insight: AI tools amplify whatever approach you bring to them. If you rush to code without understanding the problem, AI helps you build the wrong solution. If you think deeply first, AI becomes a powerful tool for implementing well-considered solutions.

Simple vs. Easy

Hickey draws a distinction between simple and easy that at first sounds pedantic. Simple means a single braid: one responsibility, one concept that isn't interwoven with anything else. Easy means near at hand: the thing you reach for automatically, the familiar choice, the solution you can implement without a design doc. The easy approach seduces you because it asks nothing of you in return. The two definitions don't overlap at all, yet we commonly assume that simple things are easy, and that is often not the case.

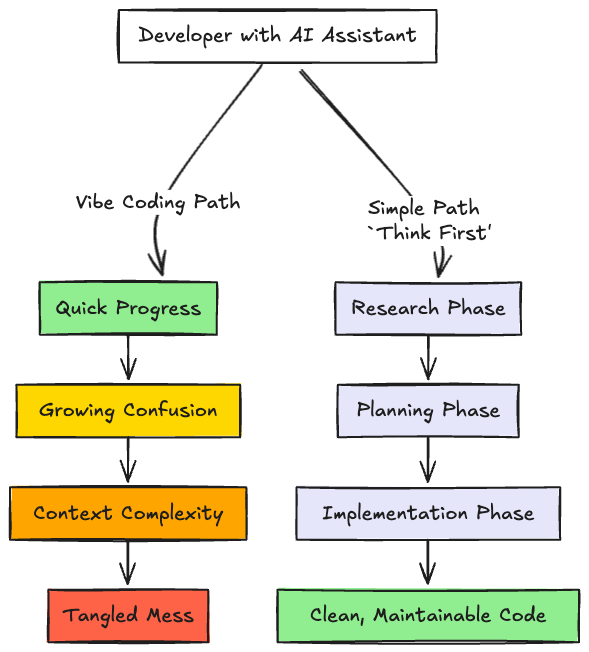

The distinction is especially clear today with AI coding agents. The easy approach is to open a chat and start vibe coding your app, iterating through conversation after conversation. It feels natural and productive. The AI responds quickly, code appears, and you can see immediate progress.

But this easy path comes at the cost of a special kind of complexity: context complexity. As conversations grow longer, the AI's context window fills with corrections, clarifications, searches, tool invocations, and evolving requirements. You are stacking complexity without realizing it. Earlier thoughtful design decisions get buried under the frustration of revisions. The AI starts making connections between these unrelated parts of the conversation, and your focused task dissolves into partial solutions and conflicting approaches, each one 'fixing' the problems created by the last.
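To make the mechanism concrete, here is a toy model of a chat session in Python. It assumes the common pattern in which every turn re-sends the entire history to the model; the `kind` labels, the word count used as a crude stand-in for tokens, and all of the message text are hypothetical. It tracks how much of the context is still the original request after a few rounds of tool output and corrections.

```python
from dataclasses import dataclass, field


@dataclass
class Message:
    role: str  # "user" or "assistant"
    kind: str  # hypothetical label: "spec", "tool_output", "correction"
    text: str


@dataclass
class ChatSession:
    """Naive chat loop: every turn re-sends the whole history to the model."""
    history: list[Message] = field(default_factory=list)

    def send(self, msg: Message) -> list[Message]:
        self.history.append(msg)
        return list(self.history)  # the context the model sees on this turn


def spec_share(context: list[Message]) -> float:
    """Fraction of context words (a crude proxy for tokens) that belong to the original spec."""
    total = sum(len(m.text.split()) for m in context)
    spec = sum(len(m.text.split()) for m in context if m.kind == "spec")
    return spec / total if total else 0.0


session = ChatSession()
ctx = session.send(Message("user", "spec", "Add CSV export to the reports page"))
print(round(spec_share(ctx), 2))  # 1.0

for _ in range(5):
    session.send(Message("assistant", "tool_output", "grep results " * 20))
    ctx = session.send(Message("user", "correction", "No, keep the old button and fix the encoding"))

print(round(spec_share(ctx), 2))  # 0.03
```

The spec hasn't gone anywhere; after five rounds it is simply about 3% of what the model reads, sitting in the same window as every correction that was meant to override it.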

Vibe coding is explicitly choosing ease over simplicity, just as Hickey warned. The comfortable, conversational interface of AI assistants makes it tempting to skip the hard work of clearly defining what we're building. We let the software evolve organically, which feels productive but inevitably creates exactly what Hickey calls "complected" (unnecessarily intertwined) systems. Our context complexity becomes code complexity, and every clarification and correction in the chat history gets woven into the implementation.
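A small, hypothetical Python illustration of the difference. The first function braids parsing, validation, and formatting together (picture each branch as a fix requested in a different chat turn); the second gives each concern its own function and composes them. All names are made up for the example. Both produce the same output for valid input, but only the second lets you change one concern without reading the others.

```python
# Complected: parsing, validation, and formatting are braided together,
# so changing the output format means wading through the parsing logic too.
def report_line_complected(raw: str) -> str:
    name, amount = raw.split(",")
    if not name.strip():
        return "ERROR: missing name"
    value = float(amount)
    if value < 0:
        return f"{name.strip().title()}: REFUND {abs(value):.2f}"
    return f"{name.strip().title()}: {value:.2f}"


# Simple: each function does one thing and knows nothing about the others.
def parse(raw: str) -> tuple[str, float]:
    name, amount = raw.split(",")
    return name.strip(), float(amount)


def validate(name: str, value: float) -> None:
    if not name:
        raise ValueError("missing name")


def format_line(name: str, value: float) -> str:
    label = "REFUND " if value < 0 else ""
    return f"{name.title()}: {label}{abs(value):.2f}"


def report_line(raw: str) -> str:
    name, value = parse(raw)
    validate(name, value)
    return format_line(name, value)


print(report_line("alice,-3.5"))  # Alice: REFUND 3.50
```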

The Lost Art of Thinking Deeply

Hammock-Driven Development presents an idea that shaped the way I worked even before I started using AI tools. Rich Hickey argues that the most critical software bugs arise from misunderstanding the problem itself, not from implementation errors.

He describes how our best solutions emerge when we harness the brain's natural creative process. The analytical mind specializes in gathering information and critiquing ideas, but it tends to get stuck in the patterns of problems it has already solved. The background mind synthesizes and connects ideas in new ways, but only when we give it space to work. You can't force it.

Hickey's approach maps directly onto the four stages of creative thought, a framework from Graham Wallas's work in the 1920s:

Preparation: Loading up your analytical mind with information. In software, this means understanding the problem domain, exploring existing solutions, and identifying constraints. Hickey emphasizes that we often rush this stage, but it's the foundation for everything else. You need to gather the raw material before your brain can do anything useful with it.

Incubation: Stepping away from the keyboard. Your background mind processes the information you've gathered, making connections your conscious mind wouldn't make. This is why solutions often come during walks, showers, or lying in a hammock. The key is to actually step away after thorough preparation. (This can also show up as nightmares about JavaScript.)

Illumination: The eureka moments when connections suddenly become clear. These insights feel like they come from nowhere, but they're actually the result of your background mind's processing during incubation. Hickey notes these moments often reveal simpler solutions than what your analytical mind was initially pursuing.

Verification: Testing whether the insight actually works. This brings the analytical mind back into play, but now it's working with a fundamentally different approach born from synthesis rather than incremental analysis. Not every insight survives verification, but the ones that do often solve the real problem.

Upfront Thinking -> Context Engineering

This focus on disciplined thinking led me to a revelation about AI tools. As I've worked with coding agents over the last few months, I've noticed that the most successful agent runs came not from writing the best prompts but from applying Hickey's preparation phase upfront. This realization led me to develop what I called at the time the Context Compression Funnel, a practice others later named Context Engineering (a much more marketable term, let's be honest). Context engineering is the discipline of designing what an AI sees before it responds: not just the prompt, but all the supporting information, tools, and structure needed to get reliable results.
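As a rough illustration, here is a minimal Python sketch that treats context as a structured object assembled before the model is called, rather than as whatever a chat history happens to contain. The `AgentContext` class, its section headings, and the tool names are all hypothetical; real agent frameworks each have their own formats.

```python
from dataclasses import dataclass, field


@dataclass
class AgentContext:
    """Everything the model sees before it responds (hypothetical structure)."""
    instructions: str
    task: str
    documents: dict[str, str] = field(default_factory=dict)  # e.g. research notes, a plan
    tools: list[str] = field(default_factory=list)  # names of tools the agent may call

    def render(self) -> str:
        parts = [f"# Instructions\n{self.instructions}"]
        for title, body in self.documents.items():
            parts.append(f"# {title}\n{body}")
        if self.tools:
            parts.append("# Available tools\n" + "\n".join(f"- {t}" for t in self.tools))
        parts.append(f"# Task\n{self.task}")
        return "\n\n".join(parts)


ctx = AgentContext(
    instructions="You are a research assistant. Do not write or edit code.",
    task="List every component the change below would touch.",
    documents={"Goal": "Add CSV export to the reports page."},
    tools=["read_file", "search_code"],
)
print(ctx.render())
```

Because every section is explicit, the context itself can be reviewed, versioned, and reused, which is much harder to do with a long conversation.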

The core insight is that we should treat AI agents not as conversational partners but as powerful tools whose context must be carefully managed. Just as Hickey advocates for choosing simple constructs over complex ones, we need to opt for simple interaction patterns that provide context upfront over the ease of conversational threads.

A Structured Approach: The Three-Phase Methodology

So how do we apply Hickey's principles to AI development? I've found success with three distinct phases that force us to think before we code, which guards against the complexity that naturally emerges from unstructured AI interactions.
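The shape of the workflow can be sketched in a few lines of Python. This assumes the agent is any function from a prompt string to a response string, and the reviewer is a function that reports whether a human approved an artifact; both are hypothetical stand-ins, not any particular tool's API. Each phase starts from a fresh prompt that contains only the goal and the previously approved artifact.

```python
from typing import Callable

Agent = Callable[[str], str]  # hypothetical: prompt in, response out
Reviewer = Callable[[str, str], bool]  # hypothetical: (phase, artifact) -> approved?


class ReviewRejected(Exception):
    """Raised when a human reviewer stops the process at a checkpoint."""


def run_phases(goal: str, agent: Agent, approve: Reviewer) -> str:
    # Phase 1: research only. The agent sees the goal and nothing else.
    research = agent(f"Research only, write no code. Map the code relevant to: {goal}")
    if not approve("research", research):
        raise ReviewRejected("research")

    # Phase 2: planning, in a fresh context holding the goal and the approved research.
    plan = agent(f"Write a step-by-step implementation plan for: {goal}\n\nResearch:\n{research}")
    if not approve("plan", plan):
        raise ReviewRejected("plan")

    # Phase 3: implementation, in a fresh context holding only the approved plan.
    return agent(f"Implement exactly this plan:\n{plan}")


prompts: list[str] = []


def fake_agent(prompt: str) -> str:
    prompts.append(prompt)
    return f"<output {len(prompts)}>"


print(run_phases("Add CSV export", fake_agent, lambda phase, artifact: True))  # <output 3>
```

Raising at a checkpoint, rather than asking the agent to "try again" in the same session, is a deliberate choice in this sketch: a rejected research document or plan gets fixed and re-reviewed instead of becoming one more correction in an ever-longer context.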

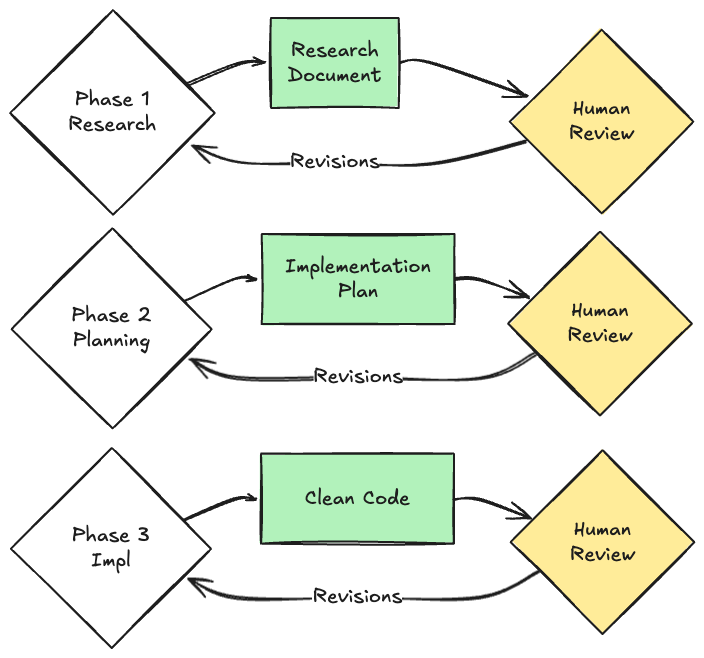

Phase 1: Research as Problem Understanding

The first phase treats the AI as a research assistant focused solely on understanding the problem space. Given a high-level goal, the AI explores the codebase to identify all relevant components. The output is a research document that maps the terrain we'll be working in.

This phase embodies Hickey's emphasis on thoroughly understanding the problem before attempting solutions. The AI can quickly traverse large codebases, identifying dependencies and relationships that provide crucial context. For instance, it might discover that what seemed like a simple UI change actually touches three different API endpoints and a shared state manager. The key to thinking deeply, though, is that we stop here and review the research before proceeding.
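One possible shape for the research artifact, sketched in Python around the UI-change example above. The `Finding` and `ResearchDoc` names, the file paths, the endpoints, and the table layout are all hypothetical; the point is that the output is a document a person can review in a few minutes, not a chat transcript.

```python
from dataclasses import dataclass, field


@dataclass
class Finding:
    path: str  # hypothetical file path
    role: str  # what the component does today
    why: str  # why the goal reaches it


@dataclass
class ResearchDoc:
    goal: str
    findings: list[Finding] = field(default_factory=list)
    open_questions: list[str] = field(default_factory=list)

    def to_markdown(self) -> str:
        lines = [f"## Research: {self.goal}", "", "| Path | Role | Why it matters |", "|---|---|---|"]
        lines += [f"| {f.path} | {f.role} | {f.why} |" for f in self.findings]
        if self.open_questions:
            lines += ["", "### Open questions"] + [f"- {q}" for q in self.open_questions]
        return "\n".join(lines)


doc = ResearchDoc(
    goal="Add an Export button to the reports page",
    findings=[
        Finding("src/api/reports.ts", "GET /reports", "export reuses its filters"),
        Finding("src/api/export.ts", "POST /exports", "starts the export job"),
        Finding("src/api/jobs.ts", "GET /jobs/:id", "polls export status"),
        Finding("src/state/store.ts", "shared state manager", "holds filters and job status"),
    ],
    open_questions=["Should exports respect the user's saved column order?"],
)
print(doc.to_markdown())
```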

The human review at this checkpoint represents the highest-leverage intervention in the entire process. By validating the AI's understanding of the problem space early on, we prevent cascading errors that would be far more costly to fix later. This is our "waking mind" actively framing the problem correctly.

Phase 2: Planning as Design Thinking

With the validated research in hand, the second phase asks the AI to create a detailed implementation plan. The plan should be explicit enough that any developer could follow it, specifying which files to modify, what functions to create, and how components will interact.
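Writing the plan as structured data is one way (an assumption on my part, not a prescribed format) to make it both reviewable and mechanically checkable. In this hypothetical Python sketch, each step names a file, the change, and the steps it depends on, and a small check flags any step that depends on work not yet done.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Step:
    id: str
    file: str  # which file to modify (paths below are hypothetical)
    change: str  # what to create or change
    after: tuple[str, ...] = ()  # ids of steps that must come first


def check_plan(steps: list[Step]) -> list[str]:
    """Return ordering problems for a reviewer; an empty list means every dependency comes earlier."""
    problems: list[str] = []
    seen: set[str] = set()
    for step in steps:
        for dep in step.after:
            if dep not in seen:
                problems.append(f"{step.id} depends on {dep}, which is not an earlier step")
        seen.add(step.id)
    return problems


plan = [
    Step("s1", "src/state/store.ts", "add exportStatus to the shared store"),
    Step("s2", "src/api/export.ts", "add startExport() client for POST /exports"),
    Step("s3", "src/ui/ReportsPage.tsx", "add Export button wired to s1 and s2", after=("s1", "s2")),
]
print(check_plan(plan))  # []
print(check_plan([plan[2], plan[0], plan[1]]))
```

The check catches only ordering mistakes; whether the plan is a good design is still the reviewer's call.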

This phase directly implements the principle of separating concerns. By distinguishing the "what" (the plan) from the "how" (the implementation), we create a simple, reviewable specification. The plan itself becomes a design artifact that embodies our thinking about the solution.

The human review at this step is your design review. We're not looking at code yet; we're evaluating the approach. Does this plan maintain proper separation of concerns? Are we tangling together things that should remain separate? This is where we apply what Hickey calls our "entanglement radar": spotting when things are getting unnecessarily intertwined.
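Entanglement radar is ultimately human judgment, but a crude mechanical hint can help point it in the right direction. The heuristic below is my own assumption, not something Hickey proposes: tag each plan step with the concern it serves, then flag any file that the plan modifies on behalf of more than one concern. The step ids, file paths, and concern names are hypothetical.

```python
from collections import defaultdict


def entanglement_report(plan: list[tuple[str, str, str]], limit: int = 1) -> dict[str, set[str]]:
    """plan holds (step_id, file, concern) triples.

    Returns the files that more than `limit` distinct concerns would touch.
    """
    concerns_by_file: dict[str, set[str]] = defaultdict(set)
    for _step_id, path, concern in plan:
        concerns_by_file[path].add(concern)
    return {path: concerns for path, concerns in concerns_by_file.items() if len(concerns) > limit}


plan = [
    ("s1", "src/ui/ReportsPage.tsx", "export UI"),
    ("s2", "src/ui/ReportsPage.tsx", "CSV encoding"),  # encoding logic leaking into a view
    ("s3", "src/csv/encode.ts", "CSV encoding"),
    ("s4", "src/api/export.ts", "export API"),
]
print(entanglement_report(plan))
```

Here the report flags `src/ui/ReportsPage.tsx`, because the plan has it doing CSV encoding as well as rendering the button. A flagged file is a prompt for discussion, not proof of a problem.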

Phase 3: Implementation as Execution

Only after validating both our understanding and our plan do we move to implementation. This is where all of the upfront work pays off: the AI now has a clear specification to follow, dramatically reducing the chance of drift or confusion.

This final phase becomes pleasingly straightforward. Because we've already done the hard work of thinking and planning ahead of time, the AI can focus on translating the plan into code. The context remains clean and focused, preventing the accumulation of complexity that inevitably strangles long conversational sessions.
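One way to keep that context clean, sketched in Python and assuming a hypothetical agent that is just a function from prompt text to response text: give each plan step its own fresh prompt containing the full plan for orientation plus the single step to perform, so no chat history or earlier output accumulates between steps. Running steps in separate contexts is my assumption about how to apply the idea, not a requirement of the methodology.

```python
from typing import Callable


def implement(plan_steps: list[str], agent: Callable[[str], str]) -> list[str]:
    """Run each plan step in its own fresh context and collect the agent's responses."""
    plan_text = "\n".join(f"{i}. {step}" for i, step in enumerate(plan_steps, 1))
    results: list[str] = []
    for i, step in enumerate(plan_steps, 1):
        # Same plan every time; only the step changes. Earlier results are not included.
        prompt = f"Plan:\n{plan_text}\n\nDo step {i} only: {step}"
        results.append(agent(prompt))
    return results


seen_prompts: list[str] = []


def fake_agent(prompt: str) -> str:
    seen_prompts.append(prompt)
    return f"DONE {len(seen_prompts)}"


steps = ["add exportStatus to the store", "add startExport() client", "add Export button"]
print(implement(steps, fake_agent))  # ['DONE 1', 'DONE 2', 'DONE 3']
```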

Building on Hickey's Foundation

Hickey's emphasis on simplicity becomes even more valuable when working with AI agents. Their power and convenience let us create new applications faster than ever, but vibe-coded applications too often end up a mess of complexity (and hilarious security issues). By structuring our interactions to maintain simplicity, we harness AI's capabilities while avoiding its pitfalls.

Our phased approach translates the discipline of "hammock time" thinking into practice. We build in moments of reflection and review, ensuring that human judgment guides the process. The AI amplifies our capabilities, but the crucial thinking work remains fundamentally human.

More importantly, we're maintaining focus on solving problems rather than just producing code. Hickey argued that we should think more about the problem space than the solution space. Our methodology ensures that two-thirds of the process (research and planning) is dedicated to understanding and designing before any implementation begins.

Thoughtful AI-Assisted Development

As AI coding assistants become more powerful and prevalent, the temptation to use them in "easy" ways will only grow stronger. We'll see tools that promise even more magical transformations from description to implementation. But Hickey's warnings about choosing ease over simplicity will remain relevant.

The methodology I've outlined goes beyond productivity with AI tools. At its core, it maintains the discipline and thoughtfulness that create maintainable, understandable systems. It recognizes that the hard work of thinking can't be outsourced to AI, only amplified by it.

I encourage you to experiment with this approach in your own AI-assisted development. Start by being deliberate about the phases of Research, Planning, and Implementation. Resist the temptation to jump straight into code generation. Permit yourself to spend time thinking. You might find, as I have, that slowing down at key moments actually speeds up the overall process while producing dramatically simpler results.

AI assistants are here to stay in software development. We must choose to do the work to build elegantly simple systems rather than succumb to the ease of vibe-coded complexity. Hickey gave us the principles. Now we need the discipline to apply them before we are buried in slop.
